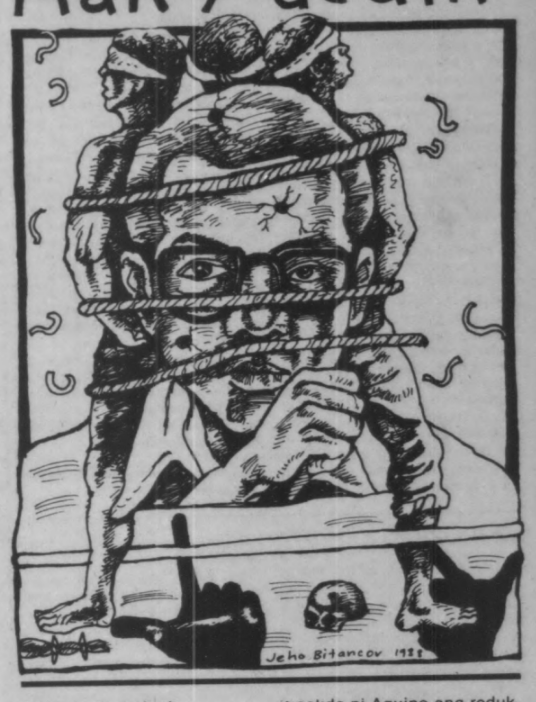

Critics love to clutch their pearls over students using artificial intelligence (AI) chatbots like ChatGPT, as if it were academic doping. But students didn’t suddenly become lazy overnight. They are instead left to adapt to the reality of an overwhelming educational system with the tools at their disposal.

As our education sector faces a crisis, unresolved and further exacerbated by years of failed leadership, some students have begun turning to AI chatbots for academic help: to summarize readings, suggest ideas, and simplify concepts. Overwhelmed by unsustainable workloads, learning gaps, and a lack of institutional support, many students are driven to use AI not only for convenience but, ultimately, for survival.

While critics note that some students turn to AI to avoid engaging with the learning process at all, the underlying problems hobbling the educational system, which have forced other students to rely on these tools as near-permanent fixtures, cannot be glossed over.

The EDCOM II Year Two report released in January identified a glaring issue: Bloated curricula in the education system, especially at higher levels, have hampered depth and mastery. The report called for streamlining delivery by reducing workload, though notably not by removing essential subjects, as the Department of Education has done with the revamped senior high school curriculum. After all, Filipino students endure excessive academic demands compared with their counterparts in other Southeast Asian countries, a point further supported by a De La Salle University study.

And when systems fail to provide adequate support, some students turn to tools like AI chatbots. Unlike a calculator or a typewriter, however, AI chatbots are trained on vast, often biased datasets that influence how students think and learn. Relying on them risks absorbing inaccurate information or submitting overly polished answers that reflect not students’ own thinking but that of chatbots that miss the nuances of the topic at hand.

A recent MIT study shows that reliance on these tools can “erode critical thinking.” When students depend on AI to do the intellectual heavy lifting, they risk bypassing the very cognitive processes that the education system is supposed to develop, like questioning ideas, connecting information, and forming arguments.

But in such an unsustainable environment, with overwhelmed educators, off-pace curricula, and insufficient resources, students are increasingly tempted to resort to AI chatbots.

Dismissing these tools as mere devices of convenience overlooks how persuasive they are. It is simply not enough to tell students to self-regulate when the tool itself nudges them toward passivity and unquestioning acceptance. As crucial as it is to develop the ability to use digital technology effectively and responsibly, that ability is not enough without regulation of the tools themselves.

Clear rules around the use of AI in education must be laid out to mandate transparency in how these tools are used in classrooms, establish ethical guidelines, and set boundaries to prevent over-dependence. But beyond these safeguards, more lasting solutions may be found in reforming the education sector to focus on post-pandemic learning recovery, improve digital access initiatives, and integrate analytical and project-based learning to foster critical thinking and reduce workload.

We all want to strengthen students’ abilities to think critically. But for that to be realized, immediate measures like the regulation of AI use must be pursued hand in hand with long-term, structural reforms to fix our failing education system.

The pursuit of preserving students’ critical thinking calls for complementary efforts beyond software regulation. It demands confronting the broken system that made AI chatbots seem like the only answer. ●

First published in the June 28, 2025 print issue of the Collegian.